VIDEOS AND PUBLICATIONS

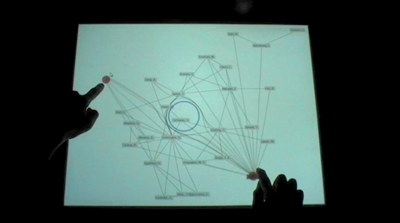

A Set of Multi-touch Graph Interaction Techniques

2013-08-07

Interactive node-link diagrams are useful for describing and exploring data relationships in many domains such as network analysis and transportation planning. We describe a multi-touch interaction technique set (IT set) that focuses on edge interactions for node-link diagrams. The set includes five techniques (TouchPlucking, TouchPinning, TouchStrumming, TouchBundling and PushLens) and provides the flexibility to combine them in either sequential or simultaneous actions in order to address edge congestion.

APDT

2013-08-07

To help overcome the difficulties inherent in planning meetings for agile teams, we used Microsoft Visual Studio 2008, XAML, Microsoft Windows Presentation Foundation and a digital tabletop to create AgilePlanner for Digital Tabletop (APDT). Built around a large, horizontal, touch-sensitive surface and adaptable to multiple platforms, APDT helps connect remote, spatially separated developers through multimodal interaction, including finger touch or mouse events, gesture recognition, handwriting recognition, and voice-command recognition. APDT was shown at the 2008 Agile Software Developer Conference, where it received very positive reviews and feedback. APDT was developed by the Agile Software Engineering Group at the University of Calgary.

ASE & TT

2013-08-07

ActiveStory: Enhanced (ASE) and TT are tools created by the Agile Software Engineering Group at the University of Calgary. ActiveStory is a tool for designing an application and performing usability tests on it in a manner in line with Agile principles. Designers can sketch UIs, add interactions, and publish the design to the Internet through a built-in, web-based Wizard of Oz system. TT enables robust, lightweight testing of user interfaces. It monitors the UIAutomation events raised during exploratory testing and turns them into editable scripts, which can be compiled into executable C# test files. Used together, the two tools let a team develop a prototype UI, click through the prototype to generate UI tests, and then run those tests against the actual software under development. In other words, ASE x TT = UITDD (UI test-driven development).
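
To make the record-and-script idea concrete, here is a minimal sketch, assuming a hypothetical event record (`UIEvent` with `automation_id`, `action`, `value`) and hypothetical script commands (`Click`, `SetText`). It is not TT's actual data model or script format; it only shows how a captured event sequence can become editable, one-command-per-line test text.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class UIEvent:
    """One captured UI event; these field names are hypothetical, not TT's data model."""
    automation_id: str  # identifier of the control that raised the event
    action: str         # "click" or "set_text" in this sketch
    value: str = ""     # text typed into the control, for "set_text" events


def events_to_script(events: List[UIEvent]) -> List[str]:
    """Turn a recorded event sequence into editable, one-command-per-line script text."""
    lines = []
    for e in events:
        if e.action == "click":
            lines.append(f'Click("{e.automation_id}");')
        elif e.action == "set_text":
            lines.append(f'SetText("{e.automation_id}", "{e.value}");')
        else:
            # Unrecognised events are kept as comments so a tester can edit them by hand.
            lines.append(f"// unhandled '{e.action}' on {e.automation_id}")
    return lines


recorded = [UIEvent("usernameBox", "set_text", "alice"), UIEvent("loginButton", "click")]
print("\n".join(events_to_script(recorded)))
```

Emitting C#-style statements keeps the script readable and hand-editable before a later step compiles it into a test file.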

ASPECTS - ASsets Planning Employing Collaborative Tabletop Systems

2013-08-07

ASPECTS is an interactive tabletop software prototype developed by the Collaborative Systems Laboratory at the University of Waterloo, in conjunction with Defence Research & Development Canada (DRDC) – Atlantic (Halifax, NS), and Gallium Visual Systems (Ottawa, ON). ASPECTS is the first step in the ongoing development of an experimental platform for investigating the use of tabletop interfaces to support planning and decision-making over dynamic, geospatial data in complex task domains, such as defence and security. ASPECTS users can view, manipulate, and share dynamically updated map-based information in a windowing environment optimized for tabletop systems. The system provides the ability to easily rotate, move, and resize windows, as well as access system menus from anywhere around the table. ASPECTS is designed to run on an Anoto digital-pen tabletop hardware platform that can track individual users, enabling interface tailoring for role-based interactions or security-level enforcement. The main project contact is Dr. Stacey Scott at the University of Waterloo.

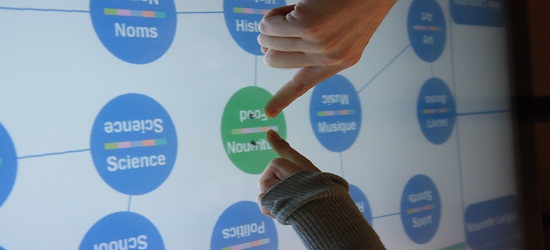

Augmenting Tandem Language Learning with the TandemTable

2013-10-06 - Publication

Abstract

In this paper, we present our computer-assisted language learning system called TandemTable. It is designed for a multi-touch tabletop and is meant to aid co-located tandem language learners during their learning sessions. By suggesting topics of discussion, and presenting learners with a variety of conversation-focused collaborative activities with shared digital artifacts, the system helps inspire conversations and keep them flowing.

Figure 1: Tandem language learners selecting a topic of discussion.

Venue

- ITS’13, October 6-9, 2013, St. Andrews, United Kingdom.
- ACM 978-1-4503-2271-3/13/10.

Researchers

- Erik Paluka – http://www.erikpaluka.com/
- Christopher Collins – http://vialab.science.uoit.ca/portfolio/christopher-m-collins

Demo of a "home-made" table

2013-08-07 - Publication

Demo of a “home-made” table by Craig Anslow at Victoria University of Wellington. Craig was a student of Robert Biddle, and the video was shot by Philippe Kruchten during Kruchten’s stay at Victoria University in February-March 2010.

Digital Tables and Walls

2013-08-07 - Publication

Video produced by CBC (Canada) and aired in December 2007 on the CBC Television Network.

EquisFTIR Input Toolkit for Optical Tabletops

2013-08-07

Fast and accurate touch detection is critical to the usability of multi-touch tabletops. In optical tabletops, such as those using the popular FTIR and DI technologies, this requires efficient and effective noise reduction to enhance touches in the camera’s input. Common approaches to noise reduction do not scale to larger tables, leaving designers with a choice between accuracy problems and expensive hardware. This video demonstrates our novel noise reduction algorithm as embedded within the EquisFTIR input toolkit. The algorithm provides better touch recognition than current alternatives, particularly in noisy environments, without imposing higher computational cost. This work was performed by Chris Wolfe, Nick Graham and Joey Pape at Queen’s University.
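
For readers unfamiliar with optical touch detection, the sketch below shows a generic baseline pipeline: background subtraction, thresholding, connected-component grouping, and a minimum blob size to reject noise. This is an assumption-labelled illustration of the kind of conventional approach the paragraph contrasts with; it is not the EquisFTIR algorithm, whose details are not given here, and the `threshold` and `min_area` defaults are hypothetical.

```python
from typing import List, Tuple

Frame = List[List[int]]  # one grayscale camera frame, indexed [row][column]


def detect_touches(frame: Frame, background: Frame,
                   threshold: int = 50, min_area: int = 2) -> List[Tuple[float, float]]:
    """Return (x, y) centroids of bright blobs that differ from the background."""
    h, w = len(frame), len(frame[0])
    # 1. Background subtraction + threshold: keep pixels clearly brighter than the static scene.
    mask = [[frame[y][x] - background[y][x] > threshold for x in range(w)] for y in range(h)]
    seen = [[False] * w for _ in range(h)]
    touches = []
    # 2. Connected components (4-neighbour flood fill) group bright pixels into blobs.
    for y in range(h):
        for x in range(w):
            if not mask[y][x] or seen[y][x]:
                continue
            seen[y][x] = True
            stack, blob = [(y, x)], []
            while stack:
                cy, cx = stack.pop()
                blob.append((cy, cx))
                for ny, nx in ((cy + 1, cx), (cy - 1, cx), (cy, cx + 1), (cy, cx - 1)):
                    if 0 <= ny < h and 0 <= nx < w and mask[ny][nx] and not seen[ny][nx]:
                        seen[ny][nx] = True
                        stack.append((ny, nx))
            # 3. Drop tiny blobs, which are more likely sensor noise than fingertips.
            if len(blob) >= min_area:
                touches.append((sum(p[1] for p in blob) / len(blob),
                                sum(p[0] for p in blob) / len(blob)))
    return touches


background = [[10] * 5 for _ in range(5)]
frame = [row[:] for row in background]
for y, x in ((1, 1), (1, 2), (2, 1), (2, 2)):  # a 2x2 fingertip blob
    frame[y][x] = 200
frame[4][4] = 200                               # a single noisy pixel
print(detect_touches(frame, background))
```

Every stage here touches every pixel of every frame, which hints at why such pipelines get expensive as tables, and therefore camera resolutions, grow.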

Exploring Entities in Text with Descriptive Non-photorealistic Rendering

2013-02-27 - Publication

We present a novel approach to text visualization called descriptive non-photorealistic rendering which exploits the inherent spatial and abstract dimensions in text documents to integrate 3D non-photorealistic rendering with information visualization. The visualization encodes text data onto 3D models, emphasizing the relative significance of words in the text and the physical, real-world relationships between those words. Analytic exploration is supported through a collection of interactive widgets and direct multitouch interaction with the 3D models. We applied our method to analyze a collection of vehicle complaint reports from the National Highway Traffic Safety Administration (NHTSA), and through a qualitative study, we demonstrate how our system can support tasks such as comparing the reliability of different models, finding interesting facts, and revealing possible causal relations between car parts.

Game Sketching with Raptor

2013-08-07

Today’s game development tools are ill-equipped to handle the development of tomorrow’s games. The video game industry currently follows a “technology-driven” development process, in which heavy focus is placed on constructing game assets that present a super-realistic experience for the player. Raptor is a tool that examines interactions designed to support design-driven development. The tool has the following features: tabletop interaction with Microsoft Surface naturally supports local synchronous collaboration; end-user development techniques such as “interaction graphs” reduce the amount of training required to use the tool; and the reduced effort required to create playable prototypes encourages play-testing throughout the development process. This work was performed by David Smith and Nick Graham at Queen’s University.

Game Sketching with Raptor

2013-08-07

This video shows Joey Pape’s (Queen’s University) surface implementation of the popular Pandemic board game.

Improving Awareness of Automated Actions using an Interactive Event Timeline

2015-06-29 - Publication

In complex collaborative domains, automation can help manage complex tasks and rapidly update information, but operators may be unable to keep up with the resulting dynamic changes. To improve situation awareness in co-located environments on digital tabletop computers, we developed an interactive event timeline that enables exploration of historical system events, using a collaborative digital board game as a case study.
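
A timeline that lets players explore past automated actions needs, at minimum, a time-ordered event log with range queries. The sketch below is a hypothetical design, not the authors' implementation; the class name `EventTimeline` and the event descriptions are illustrative assumptions. It keeps events sorted and uses binary search to answer "what happened between t1 and t2?".

```python
import bisect
from typing import List, Tuple


class EventTimeline:
    """Sketch of an append-and-query log of automated system events (hypothetical design)."""

    def __init__(self) -> None:
        self._times: List[float] = []  # kept sorted so range queries can binary-search
        self._descriptions: List[str] = []

    def record(self, time_s: float, description: str) -> None:
        # Insert in time order; bisect_right keeps same-time events in arrival order.
        i = bisect.bisect_right(self._times, time_s)
        self._times.insert(i, time_s)
        self._descriptions.insert(i, description)

    def between(self, start_s: float, end_s: float) -> List[Tuple[float, str]]:
        """Return all events with start_s <= time <= end_s, oldest first."""
        lo = bisect.bisect_left(self._times, start_s)
        hi = bisect.bisect_right(self._times, end_s)
        return list(zip(self._times[lo:hi], self._descriptions[lo:hi]))


timeline = EventTimeline()
timeline.record(12.0, "automated: resources replenished")
timeline.record(3.5, "automated: card dealt")
timeline.record(7.0, "automated: score updated")
print(timeline.between(0.0, 8.0))
```

A scrubbable timeline widget could call `between` with the visible time window each time the user drags it.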

Investigating Attraction and Engagement of Animation on Large Interactive Walls in Public Settings

2013-10-06

Victor Cheung and Stacey D. Scott. 2013. Investigating attraction and engagement of animation on large interactive walls in public settings. In Proceedings of the 2013 ACM International Conference on Interactive Tabletops and Surfaces (ITS ’13). ACM, New York, NY, USA, 381-384.

Marquardt, N. and Greenberg, S. (2015). Proxemic Interactions: From Theory to Practice. Synthesis Lectures on Human-Centered Informatics (John M. Carroll, Series Ed.). Morgan & Claypool, 199 pages.

2015-03-02

This is a major deliverable – a book describing almost all work done by my group on developing the notion of Proxemic Interactions. The abstract is below.

In the everyday world, much of what we do as social beings is dictated by how we perceive and manage our interpersonal space. This is called proxemics. At its simplest, people naturally correlate physical distance with social distance. We believe that people’s expectations of proxemics can be exploited in interaction design to mediate their interactions with devices (phones, tablets, computers, appliances, large displays) contained within a small ubiquitous computing ecology. Just as people expect increasing engagement and intimacy as they approach others, so should they naturally expect increasing connectivity and interaction possibilities as they bring themselves and their devices in close proximity to one another. This is called Proxemic Interactions. This book concerns the design of proxemic interactions within such future proxemic-aware ecologies. It imagines a world of devices that have fine-grained knowledge of nearby people and other devices (how they move into range, their precise distance, their identity and even their orientation) and how such knowledge can be exploited to design interaction techniques.

The first part of this book concerns theory. After introducing proxemics, we operationalise proxemics for ubicomp interaction via the Proxemic Interactions framework, which designers can use to mediate people’s interactions with digital devices. The framework, in part, identifies five key dimensions of proxemic measures (distance, orientation, movement, identity, and location) to consider when designing proxemic-aware ubicomp systems.

The second part of this book applies this theory to practice via three case studies of proxemic-aware systems that react continuously to people’s and devices’ proxemic relationships. The case studies explore the application of proxemics in small-space ubicomp ecologies by considering first person-to-device, then device-to-device, and finally person-to-person and device-to-device proxemic relationships. We also offer a critical perspective on proxemic interactions in the form of ‘dark patterns’, where knowledge of proxemics may (and likely will) be easily exploited to the detriment of the user.

Multi-touch tabletop games

2013-08-07

Students from the University of Calgary Department of Computer Science programmed two classic games on a SMART digital table: Pong and De-tangle.

PhysicsBox: Playful Educational Tabletop Games

2013-08-07

We present PhysicsBox, a collection of three multi-touch, physics-based, educational games. These games, based on concepts from elementary science, have been designed to provide teachers with tools to enrich lessons and support experimentation. PhysicsBox combines two current trends: the introduction of multi-touch tabletops into classrooms, and research on the use of simulated physics in tabletop applications. We also provide a Java library that supports hardware-independent multi-touch event handling for several tabletops.
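
The PhysicsBox library itself is written in Java and its API is not described here. The following Python sketch only illustrates the general adapter idea behind hardware-independent event handling: each device's raw report is converted into one common event type before reaching game code. The device formats, field names, and the `TouchDispatcher` class are all hypothetical.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List


@dataclass(frozen=True)
class TouchEvent:
    """Device-independent touch event; coordinates are normalised to [0, 1]."""
    touch_id: int
    phase: str  # "down", "move" or "up"
    x: float
    y: float


def from_pixel_device(raw: Dict, width: int, height: int) -> TouchEvent:
    # Hypothetical table that reports pixel coordinates under its own field names.
    return TouchEvent(raw["id"], raw["state"], raw["px"] / width, raw["py"] / height)


def from_normalised_device(raw: Dict) -> TouchEvent:
    # Hypothetical table that already reports normalised coordinates, with different names.
    return TouchEvent(raw["finger"], raw["phase"], raw["u"], raw["v"])


class TouchDispatcher:
    """Applications register here once and never see hardware-specific formats."""

    def __init__(self) -> None:
        self._listeners: List[Callable[[TouchEvent], None]] = []

    def add_listener(self, listener: Callable[[TouchEvent], None]) -> None:
        self._listeners.append(listener)

    def dispatch(self, event: TouchEvent) -> None:
        for listener in self._listeners:
            listener(event)


received: List[TouchEvent] = []
dispatcher = TouchDispatcher()
dispatcher.add_listener(received.append)
dispatcher.dispatch(from_pixel_device({"id": 1, "state": "down", "px": 960, "py": 540}, 1920, 1080))
dispatcher.dispatch(from_normalised_device({"finger": 1, "phase": "down", "u": 0.5, "v": 0.5}))
print(received[0] == received[1])  # True: the game code cannot tell the devices apart
```

Supporting a new tabletop then means writing one more adapter function, while the games stay unchanged.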

Proxemic Interaction

2013-08-07

In the everyday world, much of what we do is dictated by how we interpret spatial relationships. This is called proxemics. What is surprising is how little spatial relationships are used in interaction design, i.e., in mediating people’s interactions with surrounding digital devices such as digital surfaces, mobile phones, and computers. Our interest is in proxemic interaction, which imagines a world of devices that have fine-grained knowledge of nearby people and other devices (how they move into range, their precise distance, and even their orientation) and how such knowledge can be exploited to design interaction techniques. In particular, we show how we used proxemic information to regulate implicit and explicit interaction techniques. We also show how proxemic interactions can be triggered by continuous movement, or by movement in and out of discrete proxemic regions. We illustrate these concepts with the design of an interactive vertical display surface that recognizes the proximity of surrounding people, digital devices, and non-digital artefacts, in relation both to the surface and to the surrounding environment. Our example application is an interactive media player that implicitly reacts to the approach and orientation of people and their personal devices, and tailors its explicit interaction methods to fit.
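
The two triggering styles mentioned above can be contrasted in a few lines. This is a minimal sketch assuming a tracker that reports a person's distance from the display in metres; the region names, boundaries, and the linear `engagement` cue are hypothetical choices, not values from the described media player.

```python
from typing import List, Optional, Tuple

# Hypothetical region boundaries in metres from the display, nearest first.
REGIONS: List[Tuple[str, float]] = [
    ("interaction", 1.0),
    ("notification", 3.0),
    ("ambient", float("inf")),
]


def region_for(distance_m: float) -> str:
    """Discrete cue: name of the region containing this distance."""
    for name, upper_m in REGIONS:
        if distance_m < upper_m:
            return name
    return REGIONS[-1][0]


def engagement(distance_m: float, max_distance_m: float = 4.0) -> float:
    """Continuous cue: 1.0 at the display, falling linearly to 0.0 at max_distance_m."""
    return max(0.0, min(1.0, 1.0 - distance_m / max_distance_m))


class ProxemicTracker:
    """Emits enter/exit events only when a tracked person crosses a region boundary."""

    def __init__(self) -> None:
        self.current: Optional[str] = None

    def update(self, distance_m: float) -> List[str]:
        new = region_for(distance_m)
        if new == self.current:
            return []
        events = [] if self.current is None else [f"exit:{self.current}"]
        events.append(f"enter:{new}")
        self.current = new
        return events


tracker = ProxemicTracker()
for d in (5.0, 4.5, 2.0, 0.5):
    print(d, tracker.update(d), round(engagement(d), 3))
```

The continuous value suits gradual effects such as fading in detail as someone approaches, while the region events suit discrete changes such as switching from an ambient view to an interactive one.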

Social Theories and ITS Workshop (SurfNet Annual Workshop, Oct 2014)

2014-10-22

There is an increasing trend in the human-computer interaction (HCI) and interactive tabletop and surfaces (ITS) communities towards the application of social theories describing human and social behaviour into our technology designs. For example, social theories that describe how people utilize different spatial distances to engage in different types of interactions with others, called Proxemic Zones of Personal Space, have been appropriated by ITS researchers to create new forms of interactive surface interactions, called proxemic interactions. Beyond proxemics, there are a multitude of useful social theories that ITS researchers can and should utilize in their system interfaces and interaction designs to better leverage potential users’ existing social and spatial interaction practices in order to improve the effectiveness and usability of their systems. This tutorial will provide a brief overview of seminal social theories, and provide examples of how these theories can be applied to the design of interactive surfaces.

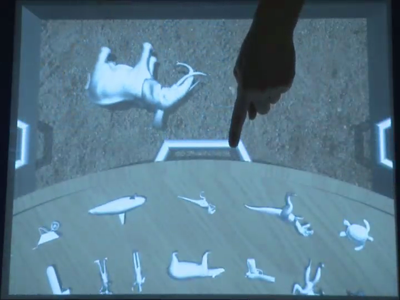

Supporting Sandtray Therapy on an Interactive Tabletop

2013-08-07

We present the iterative design of a virtual sandtray application for a tabletop display. The purpose of our prototype is to support sandtray therapy, a form of art therapy typically used for younger clients. A significant aspect of this therapy is the insight gained by the therapist as they observe the client interact with the figurines they use to create a scene in the sandtray. In this manner, the therapist can gain increased understanding of the client’s psyche. We worked with three sandtray therapists throughout the evolution of our prototype. We describe the details of the three phases of this design process: initial face-to-face meetings, iterative design and development via distance collaboration, and a final face-to-face feedback session. This process revealed that our prototype was sufficient for therapists to gain insight about a person’s psyche through their interactions with the virtual sandtray.

SurfNet eBook - Designing Digital Surface Applications

2016-03-14

This book illustrates the work of SurfNet researchers and their contributions to the state of the art by providing a selection of chapters covering the full spectrum of SurfNet research.

Section 1 presents work from Theme 1 and includes an overview of SurfNet work in this area written by Drs. Carpendale and Scott.

Section 2 discusses results from Theme 2 and includes a summary of this theme contributed by Drs. Biddle and Schneider.

Section 3 contains selected work from Theme 3, summarized by Drs. Graham and Gutwin.

Section 4 presents a set of application-focused papers.

Surface Ghosts: Promoting Awareness during Pick-and-Drop Transfer in Multi-Surface Environments

2015-06-29 - Publication

This video demonstrates a new visual feedback design, called Surface Ghosts, that improves awareness of objects being moved between two networked multi-touch computer devices, in the context of a digital card game. An iterative design process led to Surface Ghosts, which take the form of semi-transparent ‘ghosts’ of the transferred objects, displayed under the “owner’s” hand on the tabletop during content transfer.

Tabletop Military Simulation

2013-08-07

We are developing alternative methods of controlling simulations using digital tabletop computers. We believe that tabletop interaction will be faster and easier to learn, and will make it easier for interactors to work collaboratively. The Equis Army Simulation shows the battlefield on a map projected onto the top of a conference table. Interactors can move tanks and soldiers by simply dragging along the desired path of travel on the map. The map can be panned in any direction using swiping gestures with a pen or mouse and can be zoomed in or out using a context-based menu shown by touching a pen on the table. Interactors sit around the table, and can all interact with the system concurrently. The proximity of the tabletop setting makes collaboration natural. This work was performed by Andrew Heard and Nick Graham at Queen’s University.

The Continuous Interaction Space: Integrating Gestures Above a Surface with Direct Touch

2013-08-07 - Publication

The advent of touch-sensitive and camera-based digital surfaces has spawned considerable development in two types of hand-based interaction techniques. In particular, people can interact: 1) directly on the surface via direct touch, or 2) above the surface via hand motions. While both types have value on their own, we believe much more potent interactions are achievable by unifying interaction techniques across this space. That is, the underlying system should treat this space as a continuum, where a person can naturally move from gestures over the surface to touches directly on it and back again. We illustrate by example, where we unify actions such as selecting, grabbing, moving, reaching, and lifting across this continuum of space.
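
One way to treat the space as a continuum is to track hand height and map it to states that an application can use to carry actions such as grabbing and lifting from touch into hover and back. A minimal sketch, assuming a tracker that reports hand height above the surface in millimetres; the thresholds, state names, and the hysteresis margin are hypothetical, not taken from the authors' system.

```python
class HandSpaceClassifier:
    """Classifies a tracked hand's height above the surface as touch, hover, or out of range.

    Thresholds are hypothetical. A small margin (hysteresis) on the touch boundary keeps
    sensor jitter from toggling rapidly between touch and hover.
    """

    def __init__(self, touch_mm: float = 10.0, hover_mm: float = 300.0,
                 margin_mm: float = 5.0) -> None:
        self.touch_mm = touch_mm
        self.hover_mm = hover_mm
        self.margin_mm = margin_mm
        self.state = "out_of_range"

    def update(self, height_mm: float) -> str:
        # Once touching, stay in touch until the hand rises clearly above the threshold.
        limit = self.touch_mm + self.margin_mm if self.state == "touch" else self.touch_mm
        if height_mm <= limit:
            self.state = "touch"
        elif height_mm <= self.hover_mm:
            self.state = "hover"
        else:
            self.state = "out_of_range"
        return self.state


classifier = HandSpaceClassifier()
# A hand approaches, touches, jitters slightly, then lifts away.
print([classifier.update(h) for h in (400, 100, 8, 12, 20, 350)])
# ['out_of_range', 'hover', 'touch', 'touch', 'hover', 'out_of_range']
```

Because one classifier covers both regions, an object grabbed by touch can follow the hand as it lifts into the hover state instead of being dropped at the surface boundary.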

The Proximity Toolkit and ViconFace

2013-08-07

The Proximity Toolkit simplifies the exploration of interaction techniques based on the proximity and orientation of people, tools, and large digital surfaces. ViconFace is a playful demonstration application built atop this toolkit: a cartoon face on a large display tracks a person moving around it and responds visually and verbally to that person’s proximity, orientation and wand use. The accompanying video illustrates all this in action.

TouchRAM - A Multi-Touch Enabled Aspect-Oriented Modelling Tool

2013-08-07

TouchRAM is a multi-touch-enabled, aspect-oriented modelling tool that makes agile software design exploration possible. The video shows a demonstration of the Spring 2012 prototype. It already supports multi-touch editing of structural views of RAM aspects (UML class diagrams), as well as touch-based specification of aspect bindings. This allows a modeller to reuse (models of) existing design solutions in the model under development. Model weaving was not yet supported by this prototype.

TouchRAM - Reusing Software Design Models

2013-09-22

The following video shows the state of TouchRAM in July 2013. In addition to the features provided by our first prototype, TouchRAM now supports:

- True multi-touch, allowing multiple users to edit a model simultaneously
- Structural view weaving (i.e., class diagrams)
- Message view weaving (i.e., sequence diagrams)

TouchRAM - Teaser Trailer for MODELS 2013 Tool Demo Presentation

2013-10-02

This is the trailer that advertised our TouchRAM tool presentation at the International Conference on Model-Driven Engineering Languages and Systems (MODELS 2013) in Miami, Florida, on October 2, 2013.

Using Multiple Kinects to Build Larger Multi-Surface Environments

2013-09-16 - Publication

Multi-surface environments integrate a wide variety of devices, such as mobile phones, tablets, digital tabletops and large wall displays, into a single interactive environment. The interactions in these environments are extremely diverse and should utilize the spatial layout of the room, the capabilities of the devices, or both. For practical multi-surface environments, using low-cost instrumentation is essential to reduce entry barriers. When building multi-surface environments and interactions on lower-end tracking systems (such as the Microsoft Kinect) rather than higher-end tracking systems (such as the Vicon Motion Tracking systems), both developer and hardware issues emerge. Issues such as the time and effort required to build a multi-surface environment and the limited range of low-cost tracking systems led us to create MSE-API.
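
MSE-API's interface is not described here, so the following is only an assumption-laden sketch of the general idea behind extending range with several low-cost sensors: calibrate each sensor's pose in a shared room coordinate frame, transform its detections into that frame, and merge duplicates where sensor views overlap. `SensorPose`, `merge_detections`, and the 0.3 m merge radius are hypothetical.

```python
import math
from dataclasses import dataclass
from typing import List, Tuple

Point = Tuple[float, float]  # (x, y) on the floor plane, in metres


@dataclass
class SensorPose:
    """Hypothetical calibration of one sensor: its position and heading in room coordinates."""
    x: float
    y: float
    yaw_rad: float

    def to_room(self, p: Point) -> Point:
        # 2D rigid transform: rotate by the sensor's heading, then translate by its position.
        c, s = math.cos(self.yaw_rad), math.sin(self.yaw_rad)
        return (self.x + c * p[0] - s * p[1], self.y + s * p[0] + c * p[1])


def merge_detections(per_sensor: List[Tuple[SensorPose, List[Point]]],
                     radius_m: float = 0.3) -> List[Point]:
    """Map each sensor's detections into the room frame; where sensors overlap,
    treat detections closer than radius_m as the same person (first sensor wins)."""
    merged: List[Point] = []
    for pose, points in per_sensor:
        for p in points:
            q = pose.to_room(p)
            if all(math.dist(q, m) > radius_m for m in merged):
                merged.append(q)
    return merged


sensor_a = SensorPose(0.0, 0.0, 0.0)      # at the origin, facing +x
sensor_b = SensorPose(4.0, 0.0, math.pi)  # 4 m away, facing back towards sensor A
people = merge_detections([
    (sensor_a, [(2.0, 0.0)]),              # A sees one person
    (sensor_b, [(2.0, 0.0), (1.0, 0.0)]),  # B sees that person again, plus someone A misses
])
print([(round(x, 2), round(y, 2)) for x, y in people])
```

The greedy merge is deliberately simple; a real system would also need to associate people across frames and cope with calibration error.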

What Caused That Touch

2013-08-07

The hand has incredible potential as an expressive input device. Yet most touch technologies recognize only a limited set of hand parts, imprecisely (if at all), usually by inferring the hand part from the shape of the touch. We introduce the fiduciary-tagged glove as a reliable, inexpensive, and very expressive way to (a) identify many parts of a hand (fingertips, knuckles, palms, sides, and backs of the hand) and (b) discriminate between the hands of one person or of multiple people. Examples illustrate the interaction power gained by being able to identify and exploit these various hand parts.
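
Once each fiducial on a glove is registered to a wearer and a hand region, interpreting a contact reduces to a lookup. The sketch below assumes a tag detector that reports integer fiducial IDs; the tag numbers, wearer names, and the part-to-command mapping are hypothetical examples, not the authors' configuration.

```python
from typing import Dict, NamedTuple, Optional


class HandPart(NamedTuple):
    owner: str  # which person is wearing the glove
    part: str   # e.g. "index_tip", "knuckles", "palm", "side", "back"


# Hypothetical registry: each fiducial printed on a glove maps to one person and one hand region.
GLOVE_TAGS: Dict[int, HandPart] = {
    1: HandPart("alice", "index_tip"),
    2: HandPart("alice", "palm"),
    3: HandPart("alice", "side"),
    11: HandPart("bob", "index_tip"),
    12: HandPart("bob", "knuckles"),
}

# Hypothetical mapping from hand part to application command.
PART_ACTIONS: Dict[str, str] = {
    "index_tip": "select", "palm": "erase", "side": "sweep", "knuckles": "menu",
}


def interpret(tag_id: int) -> Optional[str]:
    """Return "owner:action" for a recognised tag, or None for an untagged contact."""
    hand_part = GLOVE_TAGS.get(tag_id)
    if hand_part is None:
        return None  # fall back to ordinary, anonymous touch handling
    return f"{hand_part.owner}:{PART_ACTIONS.get(hand_part.part, 'none')}"


print(interpret(2), interpret(12), interpret(99))
```

Because identity is part of the lookup result, the same hand part can trigger per-person behaviour, for example letting only a glove's wearer erase their own annotations.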

eGrid

2013-08-07

This research project was conducted by Elaf Selim and Frank Maurer at the University of Calgary, in collaboration with TRLabs and Dextrus Prosoft. eGrid is a system that allows a collaborative team to view, annotate and manage GIS maps on a multi-touch digital tabletop display. This demo presents the latest prototype of eGrid, running on Microsoft Surface. Research suggests that multi-touch digital tabletop displays are more suitable for collaborative teamwork, especially in domains such as managing digital maps. eGrid provides a convenient digital environment in which co-located teams can manage and analyze digital maps without printing extremely large map data sets on paper. eGrid is useful for control-center operations at utility companies, airports and transportation companies. It currently connects to an open GIS server provided by ESRI and displays satellite views or street maps.

eHome

2013-08-07

eHome is an application developed by the Agile Software Engineering Group at the University of Calgary. eHome allows users to control and monitor various devices around the home, as well as to track items in containers using RFID technology. The application also monitors various sensors placed in the home. Because eHome was designed using agile Product Line Engineering, it can be deployed on digital tables (e.g., Microsoft Surface, SMART tables) as well as on machines with vertical displays.

iLocator

2013-08-07

iLocator is a collaborative educational mapping game for children developed on Microsoft Surface. This game encourages players to collaborate with each other to correctly place cards in their corresponding real-world locations on a large-scale map.