SurfaceMusic: Mapping Virtual Touch-based Instruments to Physical Models

This is a completed project.

Description

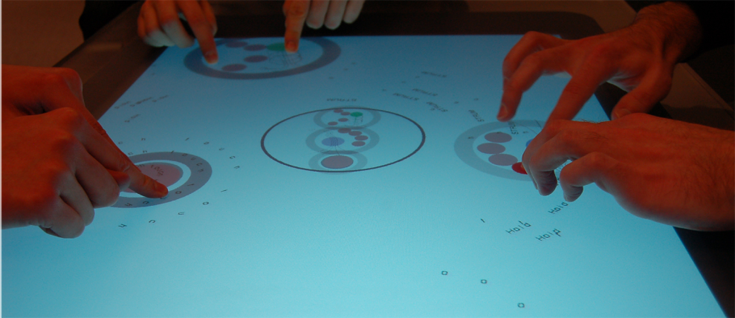

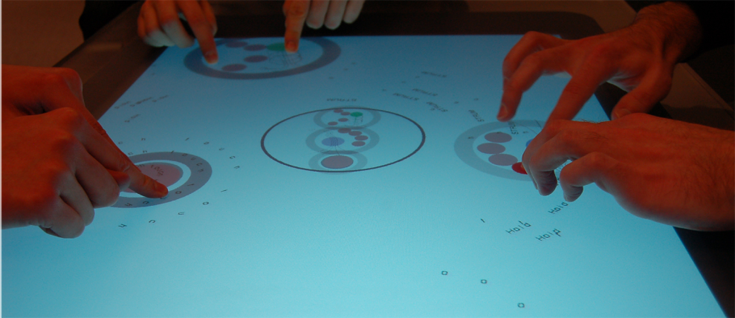

We developed a new form of tabletop music interaction in which touch gestures are mapped to physical models of instruments. Because physical models expose parametric control over the sound, they allow a more natural coupling between gesture and sound. The project covers the design and implementation of a simple gestural interface for interacting with virtual instruments, and a messaging system that conveys gesture data to the audio system.
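As a rough illustration of the idea, the sketch below maps one touch sample to parameters of a physical model and packs them into a message for an audio system. Everything in it is an assumption made for illustration, not the project's actual design: the `TouchGesture` fields, the plucked-string parameter names (`pluck_position`, `amplitude`, `damping`), the `max_speed` scaling, and the JSON message format are all hypothetical.

```python
import json
from dataclasses import dataclass


@dataclass
class TouchGesture:
    """One touch sample in normalized surface coordinates (hypothetical)."""
    x: float      # 0.0 (left edge) to 1.0 (right edge)
    y: float      # 0.0 (top edge) to 1.0 (bottom edge)
    speed: float  # finger speed, in surface widths per second


def clamp(value, low=0.0, high=1.0):
    """Restrict value to the closed range [low, high]."""
    return max(low, min(high, value))


def gesture_to_string_params(gesture, max_speed=2.0):
    """Map a touch to parameters of an assumed plucked-string model."""
    return {
        # Horizontal touch position chooses where the string is plucked.
        "pluck_position": clamp(gesture.x),
        # Faster gestures excite the string harder, up to max_speed.
        "amplitude": clamp(gesture.speed / max_speed),
        # Touches lower on the surface produce less damping.
        "damping": clamp(1.0 - gesture.y),
    }


def encode_message(instrument, params):
    """Serialize the parameters as a JSON message (assumed wire format)."""
    return json.dumps({"instrument": instrument, "params": params}, sort_keys=True)


gesture = TouchGesture(x=0.25, y=0.5, speed=1.0)
message = encode_message("string", gesture_to_string_params(gesture))
print(message)
```

The point of the sketch is the separation of concerns: the gesture layer only produces normalized, clamped parameters, and the audio side decides how those parameters drive the model.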

Images and Videos

Partners

NSERC – AITF – SMART Technologies – CFI
